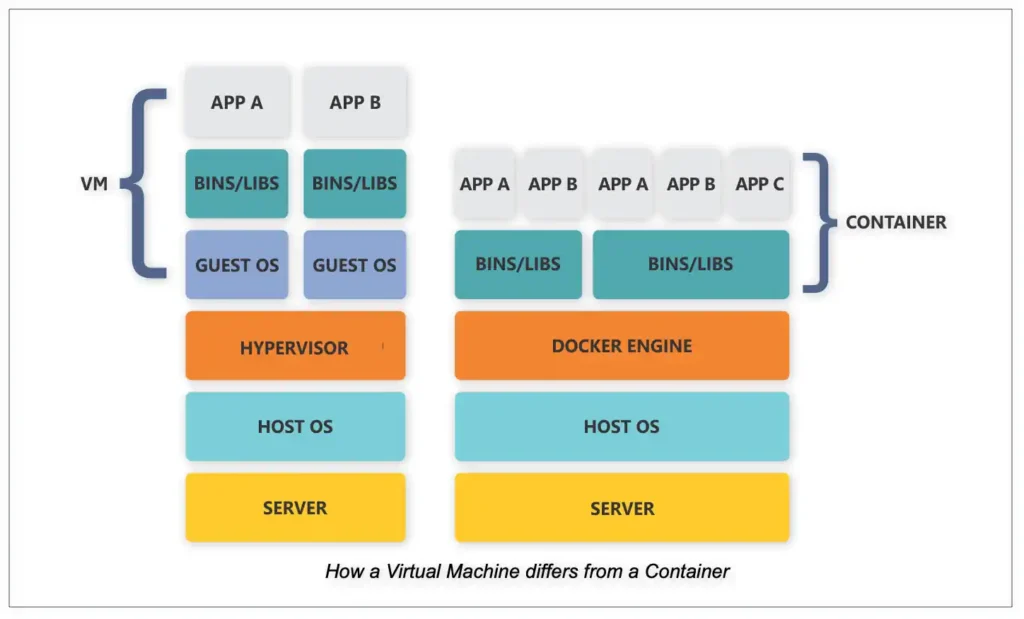

Like many modern data centre solutions, Smart Management Frameworks (SMF) has traditionally run on a virtual machine (VM). VMs are widely used because of their flexibility and cost-effectiveness. You can deploy them on pretty much any hardware. They reduce overhead by allowing multiple systems to run simultaneously on a single physical machine and be managed from a single console. They are quick to restore after system failures and can be scaled easily.

Virtual machines, however, have some disadvantages. The VM itself is just a bare shell on top of which you must install the operating system, various libraries, database software and then the application software. That amounts to a lot of setting up before anything works, which is why we packaged SMF as a managed service: to save our customers the hassle of having to set up and monitor the virtual machine.

Containers versus virtual machines

Containers share many characteristics of virtual machines, but they don’t use hypervisors, which virtual machines rely on to emulate the hardware that a guest operating system runs on. Instead, containers share the operating system kernel of their host, so the only things that reside within a container are the application itself and the libraries it depends on.

Ease of installation

All we need to do is supply the container with the application, its libraries and other dependencies pre-installed. Rather than setting up the operating system and system software separately, the only setup required is to capture the metadata of the customer’s environment and configure the container to talk to their Netezza systems. This makes it easy for customers to deploy the container themselves if they want to, whereas a virtual machine involves a lot more work.

Automatic monitoring and restarting

Virtual machines must be constantly managed and monitored, either by the data centre or by the managed service provider. If a VM goes down for whatever reason, you have to detect that and start up a new one, perhaps in an alternative location if the cause is a hardware failure. Add regular backups and similar tasks, and the overhead is significant. Containers, however, are typically managed by orchestration software such as Kubernetes or OpenShift, whose purpose is to detect when a container fails and automatically spin up a new one somewhere else. So rather than having a single virtual machine running on one physical piece of hardware, with Kubernetes or OpenShift you can run multiple containers in multiple locations simultaneously. This reduces the management overhead and consequently the cost of ownership, which is why containers are becoming so popular.
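To make the idea of "detect a failure and spin up a new one somewhere else" concrete, here is a minimal sketch in Python. It is a toy model only, not the Kubernetes or OpenShift API: the `Node` class, the `reconcile` function and the node names are all hypothetical and exist purely for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    """A hypothetical host that can run containers."""
    name: str
    healthy: bool = True
    containers: List[str] = field(default_factory=list)


def reconcile(nodes: Dict[str, Node], desired: List[str]) -> List[str]:
    """Make sure every desired container runs on some healthy node.

    Returns a log of the actions taken (empty if nothing needed doing).
    """
    actions: List[str] = []

    # Anything that was running on a failed node is considered lost.
    for node in nodes.values():
        if not node.healthy:
            node.containers.clear()

    healthy = [n for n in nodes.values() if n.healthy]
    if not healthy:
        raise RuntimeError("no healthy nodes available")

    running = {c for n in healthy for c in n.containers}
    for name in desired:
        if name not in running:
            # Place the replacement on the least-loaded healthy node.
            target = min(healthy, key=lambda n: len(n.containers))
            target.containers.append(name)
            running.add(name)
            actions.append(f"started {name} on {target.name}")
    return actions


if __name__ == "__main__":
    cluster = {"dc-a": Node("dc-a", containers=["smf"]), "dc-b": Node("dc-b")}
    cluster["dc-a"].healthy = False      # simulate a hardware failure
    print(reconcile(cluster, ["smf"]))   # ['started smf on dc-b']
```

A real orchestrator runs a loop like this continuously and uses health probes rather than a flag, but the principle is the same: you declare what should be running and the platform restores that state after a failure.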

High availability

SMF is primarily a disaster recovery solution. If the component responsible for disaster recovery were itself to die, and nobody detected and fixed that before a disaster occurred, the whole disaster recovery mechanism would fail. Thanks to the orchestration provided by OpenShift or Kubernetes, we can automate that detection and recovery, and the customer benefits from 99.99% uptime.
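As a rough illustration of what an uptime percentage means in practice, the short Python sketch below converts an availability figure into the downtime it permits over a period. The function name and the 365-day year are assumptions made for the example; the calculation is simple arithmetic, not a statement about how any service level is measured.

```python
def allowed_downtime_minutes(availability_percent: float, days: float = 365.0) -> float:
    """Minutes of downtime permitted over `days` at the given availability."""
    unavailable_fraction = 1 - availability_percent / 100
    return unavailable_fraction * days * 24 * 60


if __name__ == "__main__":
    minutes = allowed_downtime_minutes(99.99)
    print(f"{minutes:.2f} minutes per year")  # 52.56 minutes per year
```

In other words, 99.99% availability leaves less than an hour of downtime per year, which is hard to achieve if failures have to be noticed and fixed by hand.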

Ease of deployment to the Cloud

Installing a VM in a cloud-based environment is possible, but it requires even more setup and configuration than on-premises because you must also deal with the cloud provisioning. Containers, on the other hand, are designed to be cloud-native, so they can be deployed easily in a cloud OpenShift or cloud Kubernetes environment and run there more or less seamlessly. They are also cloud service-provider agnostic: whether you’re using Amazon Web Services, Microsoft Azure, Google Cloud or IBM Cloud, you can deploy your containers into any of those environments.

Supportability

Flexibility in deployment and resilience through the orchestration layer are not the only reasons for moving to containers. There are also supportability benefits, from both the customer’s and the support team’s perspective. On a virtual machine, the customer may want to upgrade or patch the operating system or install their own custom software, and if our software then stops working, it’s hard to determine whether the fault is ours or a problem introduced by those changes. A container, however, is completely self-contained and entirely under our control, so if something breaks it’s much easier to determine where the problem lies and fix it.

Which container to use?

For SMF, the process of containerization revolves around two competing formats: Docker and Podman. Docker has been around the longest and, although it doesn’t suit everybody, it’s widely supported on a lot of different platforms and works very well with Kubernetes. Podman comes from Red Hat, the company behind OpenShift, which IBM acquired in 2019.

OpenShift has a lot in common with Kubernetes and also supports Docker. We find ourselves, however, in a situation similar to when VHS competed with Betamax: one format will probably prevail eventually, but for now we must support both. SMF can run inside either a Docker container or a Podman container and be managed by either Kubernetes or OpenShift. However, IBM’s Cloud Pak for Data comes with a base node that runs OpenShift, so it is more advantageous to deploy SMF in a Podman container on the base node under OpenShift management, as the Watson Analytics Platform can be, than to run a Docker container as a non-native application. That way, deployment to the Cloud Pak for Data System is simple, and customers don’t need to install any other software for SMF to work seamlessly.

Considerations when containerizing SMF

Our experience of containerizing SMF is likely to be shared by other solution providers. We support both Docker and Podman container formats to give our customers maximum flexibility and choice. The commands for creating a container are very similar in either format, so once you’ve cracked one, producing the other is not a big or complicated engineering task.

One of the main advantages of containerization is supportability, because the software is ‘baked’ into the container and is immutable. That can be a problem, however, if the software writes to a database, as SMF does. By design, SMF must constantly capture metadata from the customer environment to keep track of things such as when the last backup or restore was done, whether anything failed, what the schedule is, and so forth. So we have put all the core software inside the container and, with the use of tablespaces, created a shared file system outside the container that the database can write to and read from. This allows us to deploy the same container to any customer, even though each customer’s metadata is specific and unique to them.
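The similarity between the two command sets, and the idea of keeping writable data outside an immutable container, can be sketched as follows. This Python snippet only builds and prints the command lines; it does not run them. The image name `example/smf:latest`, the container name and both directory paths are hypothetical placeholders, not the actual SMF packaging. The `run`, `-d`, `--name` and `-v` options shown are accepted by both the `docker` and `podman` command-line tools.

```python
import shlex
from typing import List


def run_command(engine: str, image: str, name: str,
                host_dir: str, container_dir: str) -> List[str]:
    """Build a 'run' command that mounts a host directory into the container.

    The host directory holds the data that must survive container
    replacement; the image itself stays read-only and identical everywhere.
    """
    if engine not in ("docker", "podman"):
        raise ValueError(f"unsupported engine: {engine}")
    return [
        engine, "run", "-d",
        "--name", name,
        "-v", f"{host_dir}:{container_dir}",  # storage outside the container
        image,
    ]


if __name__ == "__main__":
    for engine in ("docker", "podman"):
        cmd = run_command(engine, "example/smf:latest", "smf",
                          "/srv/smf-data", "/var/lib/smf-data")
        print(shlex.join(cmd))
```

Apart from the name of the tool, the two printed commands are identical, and because the customer-specific data lives in the mounted directory, the same image can be shipped to every customer.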

Leveraging your physical Netezza equipment in a Cloud-centric environment

On a final note, customers who have a hybrid or on-premises Netezza environment may be asking themselves how they can continue to use their physical equipment whilst taking advantage of some cloud capabilities, and how they can do so in a consistent, reliable and robust way that doesn’t break anything. Containers provide one way of achieving this. For example, a customer with a single on-premises Netezza system can now have a DR system in the cloud. The containerization of SMF means that we can have one SMF container on-premises and one in the cloud, and replicate between the two environments seamlessly.

If you’d like to find out more about SMF for CP4D please contact us.

Or, if you’d like to find out more about Kubernetes and OpenShift, check out our FAQs on our website.