“Migrate with One Command”, “Be production-ready in a day”, “Seamless Data Migration” – these are real statements from major data warehousing vendors. They imply that you can experience an effortless data migration to their platform. These claims are compelling, but is there really such a thing as an easy data migration? Based on our experience, data migrations are not easy and definitely not effortless. Sure, the mechanics of moving data from one environment to another have been streamlined by increasingly sophisticated tools, but the physical movement of data is really only a tiny part of a data migration project. It’s like moving house. The truck carrying your stuff may be new, with the latest gadgets for efficient loading and a state of the arts driver’s cabin for a smooth ride, but as we all know there is a lot more to a move than that.

No two data migrations are the same

Most businesses will have highly customised environments and data to migrate. If you are upgrading, say, from a Netezza  Twinfin to an IBM Performance Server, you would expect that the new platform would be largely compatible with the old. If, however, you are migrating between different technologies such as from Teradata to Yellowbrick, it is a completely different ballgame. Surprisingly, though, even a like-to-like data migration can be fraught with unexpected perils, as we will explain later. Let’s focus initially though on a data migration between different technologies.

Twinfin to an IBM Performance Server, you would expect that the new platform would be largely compatible with the old. If, however, you are migrating between different technologies such as from Teradata to Yellowbrick, it is a completely different ballgame. Surprisingly, though, even a like-to-like data migration can be fraught with unexpected perils, as we will explain later. Let’s focus initially though on a data migration between different technologies.

Refreshing your data warehouse environment

If you have chosen to refresh your data warehouse with a different technology, you will be doing so because of the expected gains in performance and functionality. It is almost inevitable then that there will be significant differences between the source and the target technologies and products. These differences must all be catered for during the data migration.

Migrating your database objects

The first big task is to migrate all the objects. Some examples of database objects include tables, views, clusters, sequences, indexes, and synonyms. All of these objects would have been created and maintained using data description language (DDL) that is specific to that environment. Although many DDL’s share a common syntax, there still are quite a few differences between them particularly when they create or modify objects that are peculiar to that environment. The syntax that’s supported on the target platform for creating objects may not be identical to that on the source system. As such, the existing object definitions will need to be translated in order that they will result in an equivalent object structure in the target environment. This could be as simple as changing a ‘DISTRIBUTE ON’ clause to a ‘DISTRIBUTED BY’ clause. It could, however, be more complicated. One product may limit the number of columns that you can use in a ‘PARTITION BY’ or ‘DISTRIBUTE BY’ type clause. You may have to add a heuristic rule to reduce the number of columns that get included in that clause. There may also be other types of physical constraints that are part of the created object on the source without an equivalent in the target. Netezza, for example, has an ‘organize by’ clause in a create table statement that may not exist in the target database.

Differing data types

There may be different data types between the source and the target databases. On the source system, for example, you may have a VARCHAR field that is too long to fit into the target database. In this case, you would need to convert that VARCHAR field into a different data type in order for that object to fit. Date fields can be problematic in the way they are represented and may need to be manipulated during the migration process in order for the target environment to correctly interpret them.

Naming conventions

Another complication can be naming conventions. Some databases have limitations or restrictions on how long the name for a table or a column can be. Some products may insist on use of schema names to prefix table names. Others may not support schemas at all, or as in the case of DB2 only permit a single database, meaning that the concept of having a separate production and development database isn’t supported. We came across this problem in a recent data migration whereby we had to rename half the tables and shoehorn all of the objects into a single database, whereas in the source system they existed in two.

Reserved words

Some systems have reserved words that you are not permitted to use as part of a column name. So, for example, you can have a column called currency in one database but if currency is a data type in the target database you’ll be prevented from using the word currency because it is a reserved word.

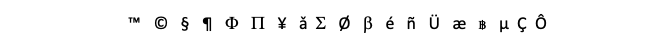

Special characters

The data itself may contain special characters or characteristics that are not supported by the new system, such as European character sets with accents, umlauts, cedillas, acutes, graves, etc which can sometimes be lost as part of the translation process.

Scalability

Whilst none of the above is particularly complex, you need to be able to cater for them at scale. If you have thousands of objects, you don’t want to be manually entering and altering each individual piece of DDL by hand. That can be extremely time consuming and prone to error. Similarly, with data interpretation you would not want to be writing individual scripts for every scenario, rather to be able to manipulate the data automatically during the migration process.

Data Validation

Data validation is probably the most difficult task as well as often being the most neglected. It is essential that the migrated data matches the source data exactly. It is a dangerous assumption that the absence of errors or load failures is proof of a successful migration. Data may be omitted, truncated or misinterpreted without the target system even noticing. Furthermore, relying on end users to spot data discrepancies is a very risky strategy.

So let’s now think about the things that can complicate even a like-to-like data migration.

Users, Groups and Permissions

In addition to ensuring that database permissions are correctly migrated, customers who use Active Directory or LDAP to integrate single sign-on password authentication with database credentials and logins will have to do rigorous testing to ensure that that authentication method works consistently in the new environment as it did in the old, even if they are migrating to similar technologies.

User Defined Functions and Stored Procedures

Many customers have code embedded within their database in the form of stored procedures or user defined functions and these will typically need to be rewritten and migrated from the old environment to the new. This may or may not be possible depending on what the capabilities are of the new target environment. So for example on Netezza, when you are migrating from old to new something that is not made very clear in the documentation is that Netezza Performance Server is 64-bit whereas the old environment is 32-bit, which means that all your user defined functions have to be recompiled. Customers that rely heavily on these have to make sure they allocate time, budget and resources to this task otherwise the system won’t be useable.

Not-so compatibility

Buyer beware, that’s what we know about buying a second hand car, and you wouldn’t expect that to also be the case with state of the art data warehousing technology. The fact is that claiming compatibility between technologies is something that cannot be guaranteed. We recently had a customer who had been promised 98-99% compatibility by the vendor but in reality they found that they had to rewrite a lot of their ETL code and this blew out their budget and schedule. We’re not singling out any particular vendor by the way – such issues always arise no matter which are the source and target systems.

Business Intelligence Layer

Continuing the theme of compatibility, if you are using Business Objects or Tableau or any reporting tool for that matter, you need to test that all the queries work in the new environment. This may involve, for example, where you have changed schema names in order to fit with constraints in the new technology, or where data types have changed.

Backup and Recovery

Don’t assume that in-house processes and procedures such as backup and recovery will run without requiring some conversion work. There may be fundamental differences in approach between two environments. IBM Integrated Analytics System (IIAS) was supposed to be 98% compatible with Netezza, and yet it does not support incremental backups like Netezza does. Having to do a full backup once a week instead of using incremental backups is actually quite a big thing. File compression on DB2 is also inferior to that on Netezza, and so the resulting file sizes are much larger.

Non-functional Requirements

Your data migration isn’t finished until you are operational on your new platform and it’s a great disappointment if its performance does not live up to the promises made by the vendor. Your data migration plan has to include capacity and performance testing to ensure that not only does all the data physically fit and is consistent and correct, but also that your non-functional requirements such as performance, response times, concurrency and workloads all behave in an acceptable way as well.

In summary, whereas a data migration may be trivialised by the marketing spin it is really important to anticipate all the potential hurdles that get in the way of a smooth transition to a new platform. We’ve discussed how different technologies handle data differently, how the data itself has to be manipulated to fit into a new environment, and how important it is to validate your data and all of the non-functional requirements in the new system.

In our next blog, we will continue this discussion and show you how to do a Smart data migration with Smart Data Frameworks.

In the meantime, if you want to talk to us about how to help you with your data migration, why not contact us or view more insights here.